News

-

SmoothE Wins the ASPLOS 2025 Best Paper Award

SmoothE: Differentiable E-Graph Extraction wins the Best Paper Award at the 2025 ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS)!

-

Cypress Wins the ISPD 2025 Best Paper Award

Our paper Cypress: VLSI-Inspired PCB Placement with GPU Acceleration has received the Best Paper Award at the International Symposium on Physical Design (ISPD) 2025!

-

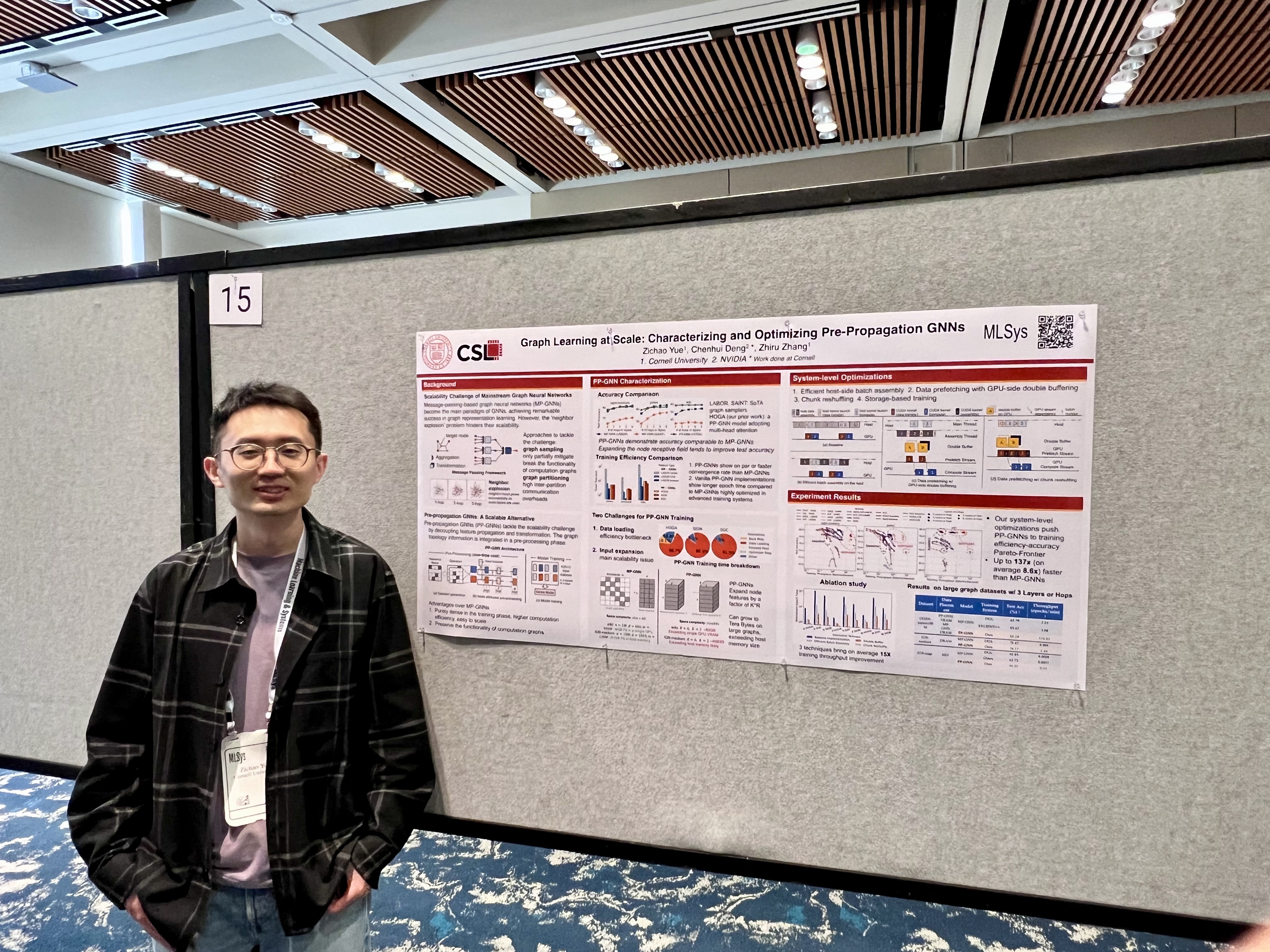

Paper Accepted to MLSys 2025: Scaling Graph Learning with Pre-Propagation GNNs

We’re excited to announce that our paper, Graph Learning at Scale: Characterizing and Optimizing Pre-Propagation GNNs, has been accepted to the Annual Conference on Machine Learning and Systems (MLSys) 2025!

-

Congratulations to Hanchen Jin on His PhD Graduation!

Please join us in congratulating Hanchen Jin on earning his PhD with his thesis Analytical Modeling and Efficient Implementation for Specialized Hardware Acceleration of Sparse Applications!

-

Nikita Lazarev Passes His Thesis Defense!

We are thrilled to celebrate the graduation of Nikita Lazarev, whose doctoral research has made significant strides in improving the efficiency and reliability of latency-critical cloud services.

-

Shaojie Successfully Defends His PhD Thesis!

Congratulations to Dr. Shaojie Xiang for successfully defending his PhD thesis: Accelerator Programming with Decoupled Data Placement for Heterogeneous Architectures!

-

ARIES Paper Accepted to FPGA 2025 and Nominated for Best Paper!

Our paper ARIES: An Agile MLIR-Based Compilation Flow for Reconfigurable Devices with AI Engines, a collaboration with Brown University, has been accepted to the 2025 International Symposium on Field-Programmable Gate Arrays (FPGA) and nominated for the Best Paper Award!

-

SmoothE Paper Accepted to ASPLOS 2025!

We’re excited to share that the paper SmoothE: Differentiable E-Graph Extraction has been accepted to ASPLOS 2025, one of the premier venues at the intersection of architecture, programming languages, and systems!

-

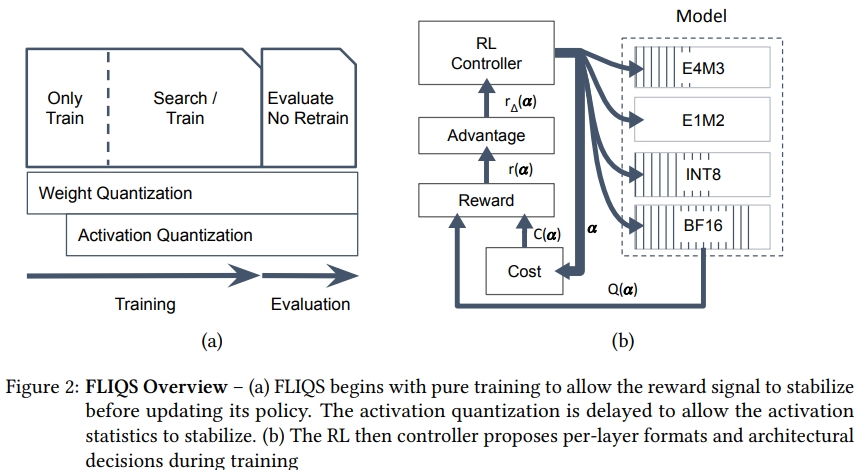

Our Paper FLIQS Wins the AutoML 2024 Best Paper Award

We are thrilled to announce that FLIQS: One-Shot Mixed-Precision Floating-Point and Integer Quantization Search wins the Best Paper Award at the 2024 International Conference on Automated Machine Learning!

-

Hongzheng Wins Third Place in the PLDI 2024 Student Research Competition

-

Our Pangenome Layout Paper Is Accepted to SC24!

Our paper Rapid GPU-Based Pangenome Graph Layout has been accepted to the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24).

-

Hongzheng Chen is Selected as a 2024 MLCommons Rising Star!

We are pleased to share that Hongzheng Chen has been selected as a 2024 MLCommons Rising Star!

-

Prof. Zhang Receives the 2023 AWS AI Amazon Research Award!

We are thrilled to share that Professor Zhang has received the 2023 AWS AI Amazon Research Award!

-

Prof. Zhang Wins the 2023 Intel ORA!

We are thrilled to share that Professor Zhang is among the esteemed recipients of Intel's 2023 Outstanding Researcher Awards!

-

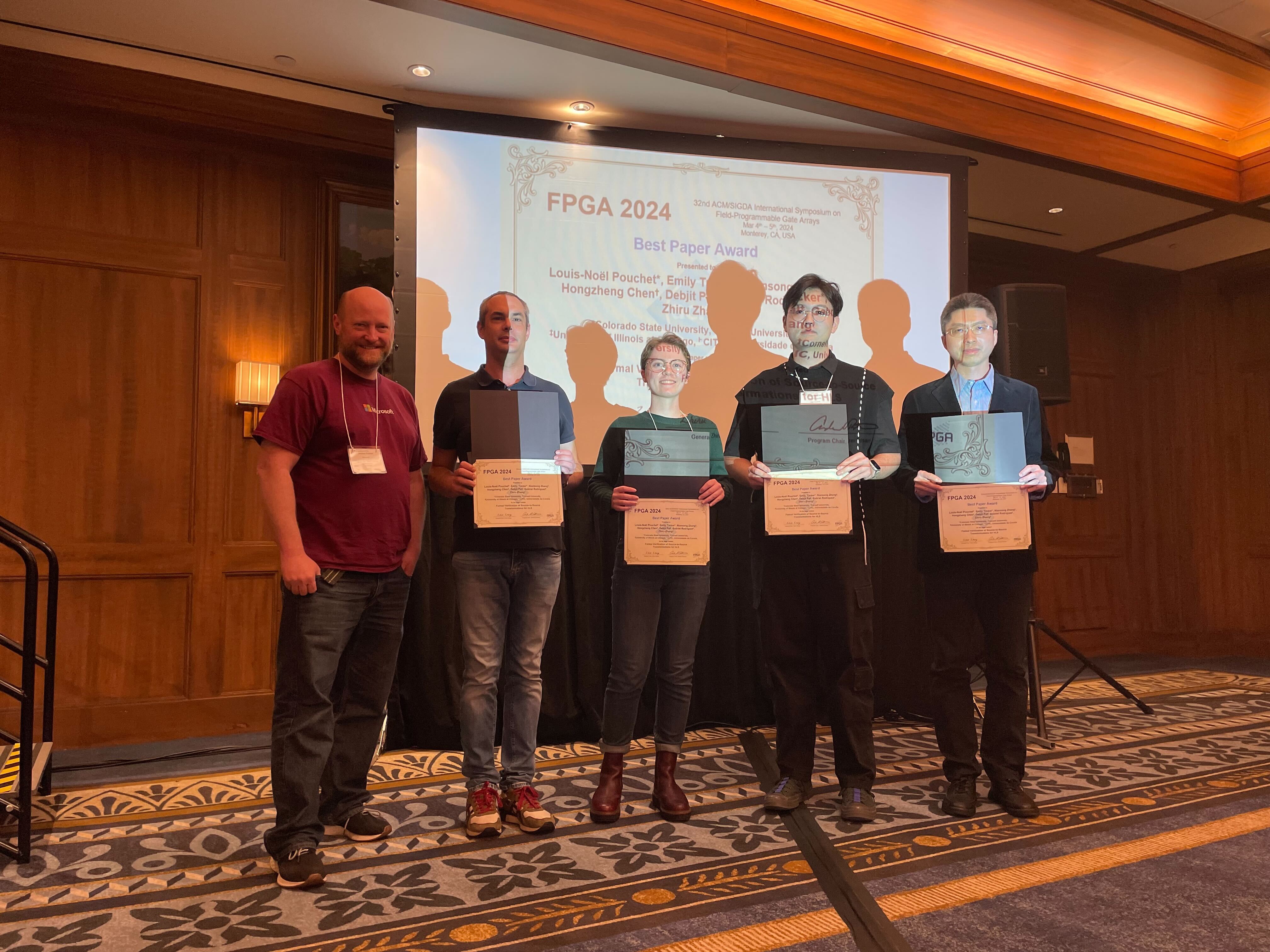

Niansong and Hongzheng Win the Best Paper Award at FPGA'24!

Congratulations to Louis-Noël Pouchet and all co-authors, including Niansong Zhang and Hongzheng Chen!

-

Chenhui Successfully Defends His PhD Thesis!

Congratulations to Dr. Chenhui Deng for passing his defense of "Accurate and Efficient Representation Learning on Large-Scale Graphs"!

-

Congratulations to Yichi on a Successful Ph.D. Defense!

Dr. Yichi Zhang successfully defends his thesis entitled "Co-Design of Binarized Deep Learning"!

-

Yixiao Wins the 2022-23 Outstanding TA Award!

Congratulations to Yixiao Du on being recognized for his excellent work as a teaching assistant for ECE 2300 in fall 2022. He is this year's sole recipient of the ECE Outstanding PhD TA Award!

-

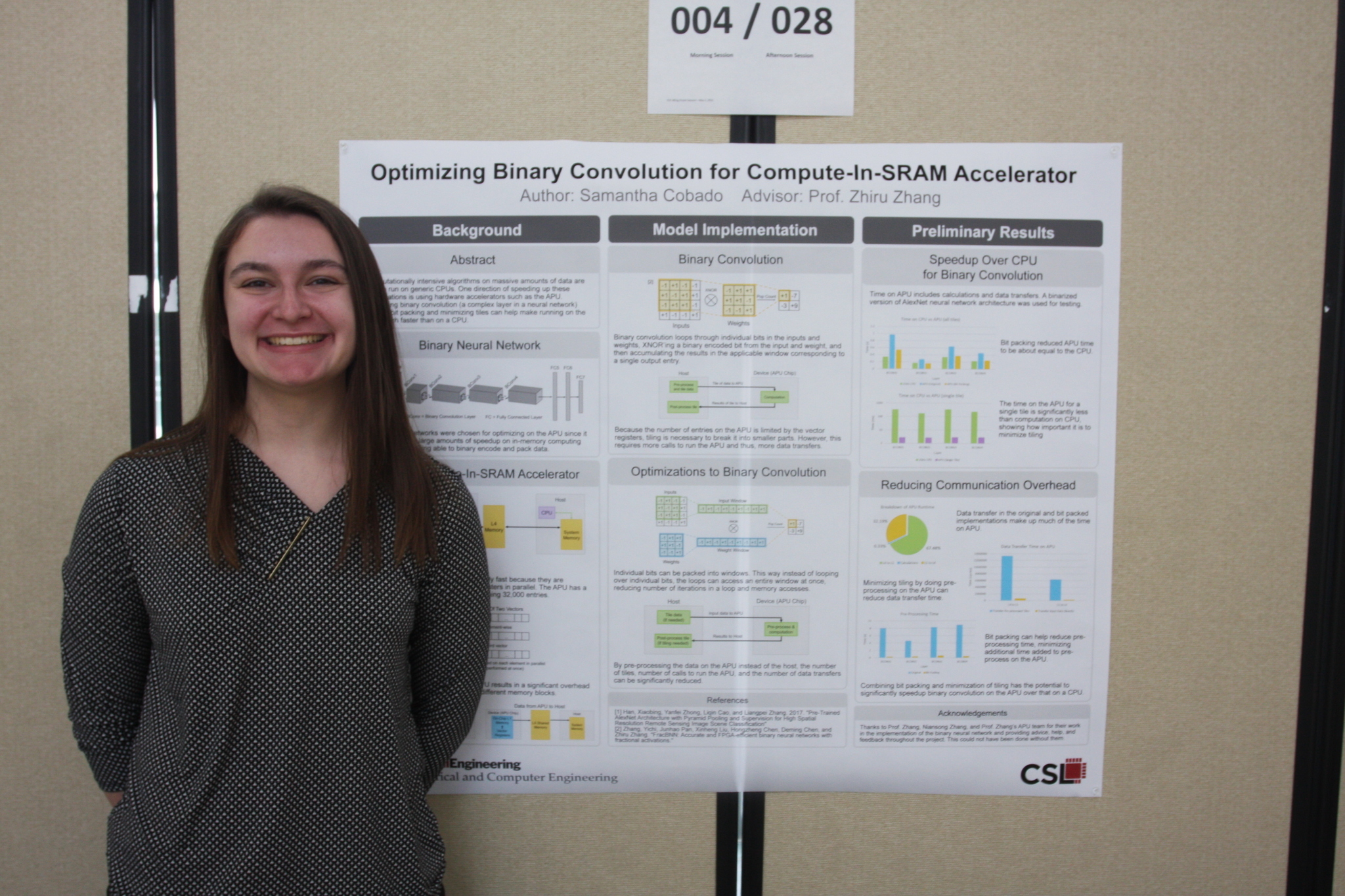

Samantha Cobado Wins “Best in AI” at the ECE M.Eng. Poster Session!

Samantha Cobado’s poster on binarized neural networks earned “Best in AI” at the 2023 ECE M.Eng. Poster Session for her work on compute-in-SRAM accelerator design.

-

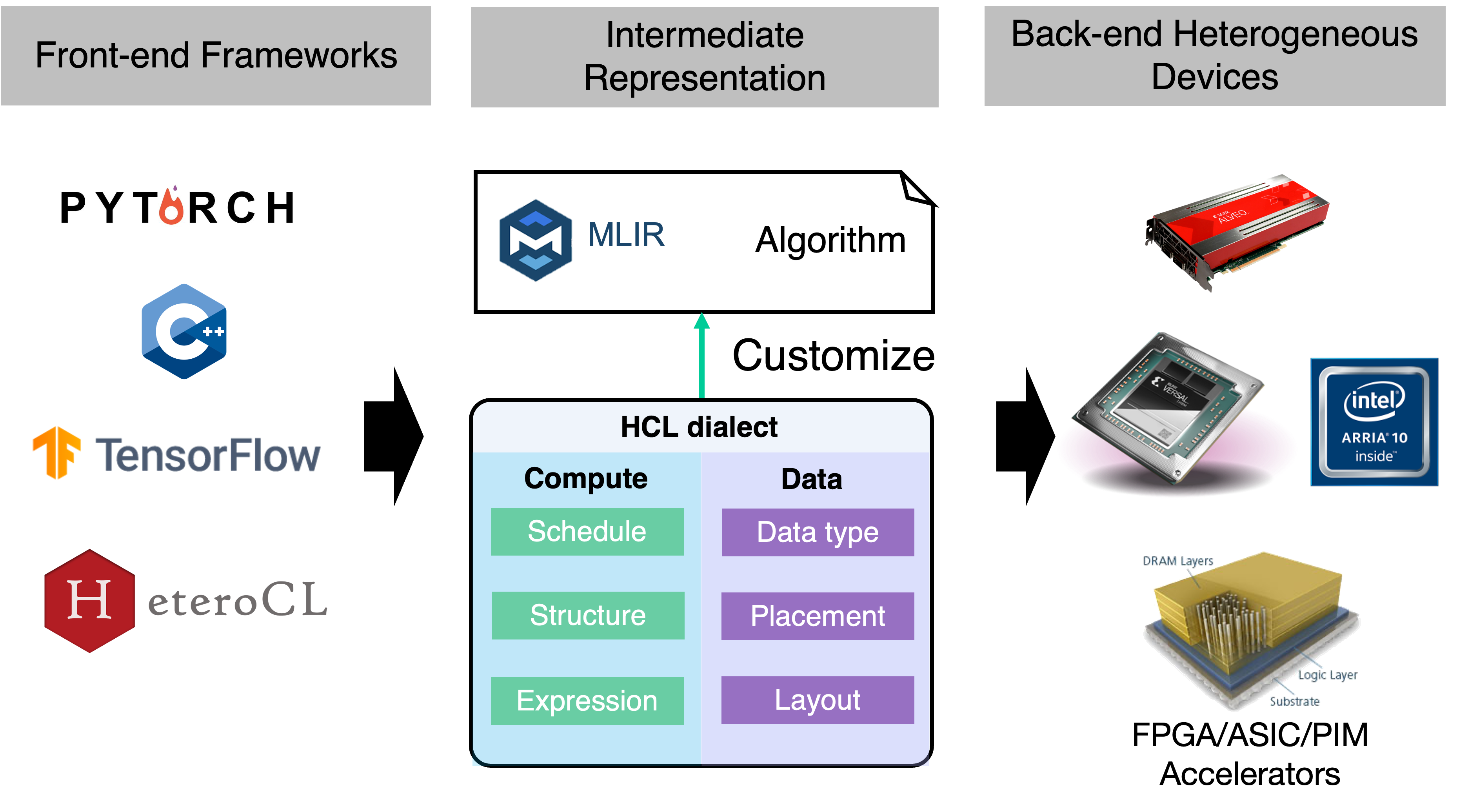

HeteroCL v0.5 Released!

The latest update to HeteroCL, our group's programming infrastructure for heterogeneous computing, has been released.

-

Zhang Research Group Joined the ACE Center for Evolvable Computing

The Zhang Research Group will lead a major research theme at the newly launched ACE Center for Evolvable Computing, advancing programmable and scalable hardware accelerators as part of the JUMP 2.0 initiative.

-

Prof. Zhiru Zhang Elevated to IEEE Fellow

Prof. Zhiru Zhang joins the newly elevated 2023 class of IEEE Fellows for his contributions to FPGA high-level synthesis and machine learning accelerators.

-

GARNET Is Accepted to LoG'22 as a Spotlight Paper

Chenhui Deng and Prof. Zhiru Zhang, along with their co-authors, Xiuyu Li and Zhuo Feng, will publish their work, GARNET, as a part of this year's proceedings of the Learning on Graphs Conference (LoG).

-

Ecenur Successfully Defends Her PhD Dissertation!

Congratulations to Dr. Ecenur Üstün, who successfully defended her thesis on “Learning-Assisted Techniques for Agile Arithmetic Design on FPGAs”!

-

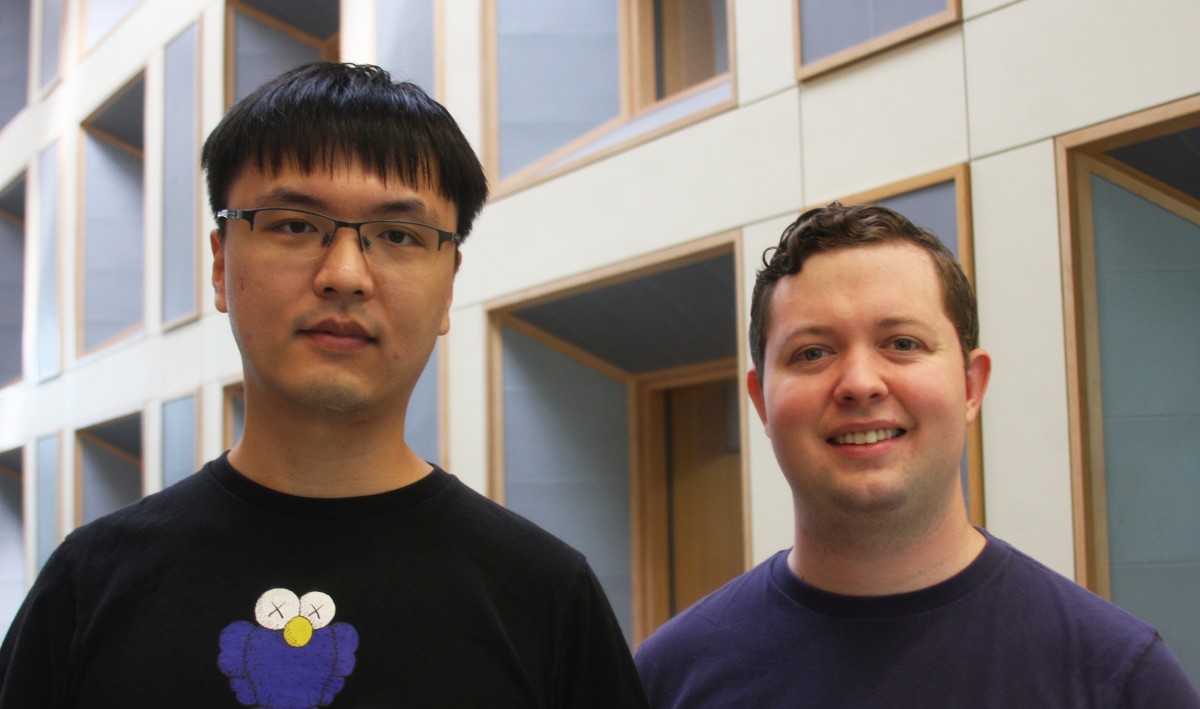

Chenhui and Andrew Win 2022 Qualcomm Innovation Fellowship!

Congratulations to Chenhui Deng and Andrew Butt on winning the 2022 Qualcomm Innovation Fellowship (QIF) for their work on power inference using self-supervised learning!

-

Zhang Group Organized FCCM 2022 at Cornell Tech

FCCM 2022 was held at Cornell Tech with organizational support and participation from the Zhang Group.

-

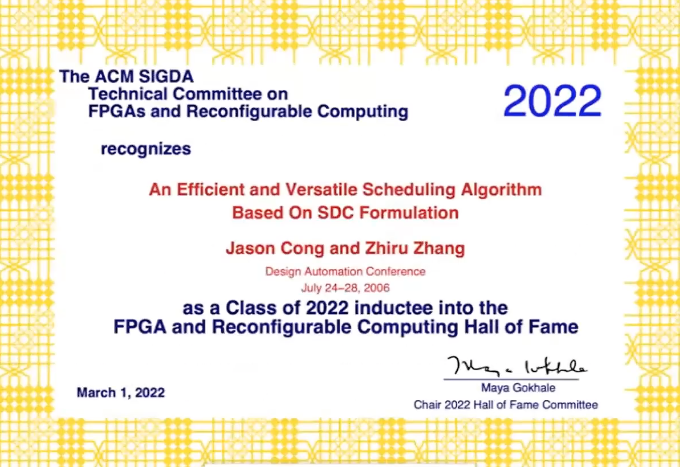

SDC Scheduling Inducted into the ACM/SIGDA TCFPGA Hall of Fame!

SDC scheduling, a core innovation in modern HLS tools, earns Hall of Fame recognition for its lasting academic and industrial impact.

-

RapidStream Received the FPGA'22 Best Paper Award!

RapidStream wins Best Paper at FPGA 2022 for its innovative approach to accelerating FPGA design workflows.

-

Xiuyu Earns 2022 CRA Honorable Mention!

Our undergraduate researcher Xiuyu Li received an honorable mention for the 2022 CRA Outstanding Undergraduate Researcher Award.

-

Two Papers Accepted to FPGA 2022!

Congratulations to all the authors of HeteroFlow and HiSparse on publishing their work at the International Symposium on Field-Programmable Gate Arrays (FPGA) 2022!

-

Yuan and Sean Successfully Defend Their PhD Theses!

Congratulations to Dr. Yuan Zhou and Dr. Sean Lai on passing their PhD thesis defenses!
